System Description

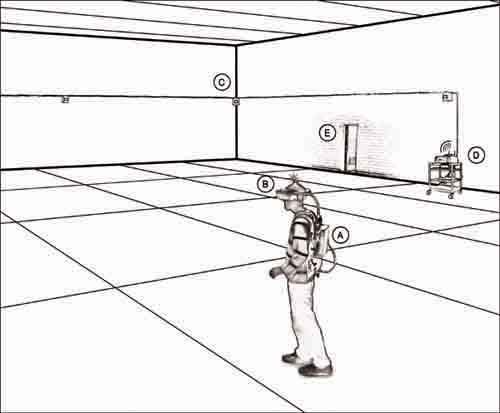

Figure 1: Huge Immersive Virtual Environment System.

The HIVE is composed of several major subsystems and components, as depicted in Figure 1:

- A wearable rendering system

- A head-mounted display and orientation tracking device

- Twelve infrared cameras

- A position processing system

- A control room for monitoring the virtual environment (VE) state

Current system

Figure 2: The wearable rendering system

A) Wearable Rendering System

Users of the HIVE are completely untethered and encumbered as little as possible. They carry a portable rendering computer, an orientation sensor for head tracking, a custom-built video control unit (VCU) for the head-mounted display (HMD), and associated power supplies. The rendering computer, VCU, and power supplies are mounted on a small backpack frame (see Figure 2). The total weight of the backpack with a single battery for HMD power is approximately 9.8 kilograms.

B) Head Mounted Display (HMD) and Orientation Tracker

Immersion in the HIVE is achieved by presenting computer-generated images to participants in an NVIS nVisor SX HMD. The unit is a lightweight (1 kg), dual-VGA, frame-parallel HMD with a stereo display at a resolution of 1280 x 1024 for each eye. The field of view is approximately 60° diagonal and 48° horizontal, with an angular resolution of 2.25 arc minutes per pixel. Overlap between the displays is 100%. The dual reflective FLCOS (ferroelectric liquid-crystal-on-silicon) displays produce 24-bit true color with a contrast ratio of 200:1. Headphones with a frequency response ranging from 15 to 25,000 Hz are integrated into the unit. Correct sync information and voltage must be supplied to the HMD through a stereo video control unit (VCU).
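The quoted angular resolution can be checked from the other figures in this section. The sketch below assumes that 1280 is the horizontal pixel count and that pixels are spread evenly across the 48° horizontal field of view; the function name is illustrative only.

```python
def arcmin_per_pixel(fov_degrees: float, pixels: int) -> float:
    """Average angular size of one pixel, in arc minutes (1 degree = 60 arc minutes)."""
    return fov_degrees * 60.0 / pixels


# Values quoted in the text; treating 1280 as the horizontal pixel count is an assumption.
horizontal = arcmin_per_pixel(48.0, 1280)
print(f"{horizontal:.2f} arc minutes per pixel")
```

Under these assumptions the result matches the 2.25 arc minutes per pixel stated above.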

C) Twelve Infrared Cameras

In general, optical position tracking using triangulation can operate over longer distances with less interference than alternative tracking technologies. HIVE cameras (Figure 3) work optimally in cool ambient lighting (e.g., fluorescent, mercury vapor, and sodium vapor lights). Using twelve cameras facilitates coverage of the large tracking area, increases precision, and reduces problems related to occlusion.
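To illustrate the idea of triangulation, here is a minimal sketch that estimates a marker position from two camera rays by taking the midpoint of the shortest segment between them. This is a textbook method, not necessarily what the HIVE tracker uses; the function name, camera positions, and ray directions are all hypothetical.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def _dot(u: Vec3, v: Vec3) -> float:
    return sum(a * b for a, b in zip(u, v))


def triangulate_midpoint(p1: Vec3, d1: Vec3, p2: Vec3, d2: Vec3) -> Vec3:
    """Midpoint of the shortest segment between two camera rays.

    p1, p2 are camera positions; d1, d2 are ray directions toward the marker.
    """
    w = tuple(a - b for a, b in zip(p1, p2))
    a, b, c = _dot(d1, d1), _dot(d1, d2), _dot(d2, d2)
    d, e = _dot(d1, w), _dot(d2, w)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are parallel; no unique intersection")
    # Parameters of the closest points along each ray.
    t1 = (b * e - c * d) / denom
    t2 = (a * e - b * d) / denom
    on_ray1 = tuple(p + t1 * k for p, k in zip(p1, d1))
    on_ray2 = tuple(p + t2 * k for p, k in zip(p2, d2))
    return tuple((x + y) / 2.0 for x, y in zip(on_ray1, on_ray2))


# Hypothetical camera positions (meters) and rays toward a marker at (1, 2, 0.5).
marker = triangulate_midpoint((0.0, 0.0, 3.0), (1.0, 2.0, -2.5),
                              (10.0, 0.0, 3.0), (-9.0, 2.0, -2.5))
print(marker)
```

With more than two cameras, a least-squares solution over all visible rays is the usual generalization. That redundancy is one reason additional cameras can improve precision and tolerate occlusion.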

Figure 3: A HIVE camera

D) Position Processing System

The HIVE uses the infrared Precision Position Tracker (PPT X8) manufactured by WorldViz, LLC. It can track the position of up to eight markers (infrared LEDs emitting light at 880 nm) simultaneously. The system updates position at 60 Hz; the manufacturer indicates that the total latency for the tracker (including RS-232 communication) is 20 ms. For measurements within five meters of the center of the HIVE, position tracking error averages 2.09 cm ± 0.005 cm (95% confidence interval). Across 80% of the tracking area, absolute measurement error is less than 7.49 cm, and the overall RMS error is 6.68 cm. Variability in position estimates averages 0.44 cm ± 0.28 cm (95% confidence interval). Across 80% of the tracking area, variability in position estimation is less than 0.63 cm.
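The source does not say how these statistics were computed. As a rough illustration, summary figures of this kind (mean with a 95% confidence interval, RMS error, and the share of samples under a threshold) could be derived from a list of per-location errors as sketched below. The function names and sample values are hypothetical, and the confidence interval uses a normal approximation.

```python
import math
from statistics import mean, stdev
from typing import Sequence


def rms(errors: Sequence[float]) -> float:
    """Root-mean-square of the error samples."""
    return math.sqrt(sum(e * e for e in errors) / len(errors))


def ci95_half_width(errors: Sequence[float]) -> float:
    """Half-width of a 95% confidence interval for the mean (normal approximation)."""
    return 1.96 * stdev(errors) / math.sqrt(len(errors))


def fraction_below(errors: Sequence[float], threshold: float) -> float:
    """Fraction of samples with error strictly below the threshold."""
    return sum(1 for e in errors if e < threshold) / len(errors)


# Hypothetical per-location errors in centimeters, not HIVE measurements.
samples = [1.0, 2.0, 2.0, 3.0, 7.0]
print(f"mean = {mean(samples):.2f} cm +/- {ci95_half_width(samples):.2f} cm")
print(f"RMS  = {rms(samples):.2f} cm")
print(f"share below 5 cm = {fraction_below(samples, 5.0):.0%}")
```

The RMS weights large errors more heavily than the mean does, which is consistent with an overall RMS figure that sits well above the average error near the center of the tracking area.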

E) Control Room

The rendering and position-tracking computers are controlled and monitored through a graphics workstation. This workstation provides remote access to the computer worn by the user as well as to another workstation dedicated to the position tracking system. It enables researchers to monitor the visualizations displayed in the user's HMD. The HIVE software supports monitoring by creating a cluster-based network, which allows multiple viewpoints of a single virtual simulation to be rendered across more than one computer. Low-level synchronization of the VE state across machines is handled automatically over the local area network via UDP packets.
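The actual packet format used by the HIVE software is not described here. The sketch below only illustrates the general pattern of serializing a small state record and sending it as a UDP datagram. The packet layout, function names, and any address passed to `send_state` are assumptions made for illustration.

```python
import socket
import struct
from typing import Tuple

Vec3 = Tuple[float, float, float]

# Hypothetical layout in network byte order: frame counter, then
# position (x, y, z) and orientation (yaw, pitch, roll) as 32-bit floats.
STATE_FORMAT = "!I6f"


def pack_state(frame: int, position: Vec3, orientation: Vec3) -> bytes:
    """Serialize one state update into a fixed-size datagram payload."""
    return struct.pack(STATE_FORMAT, frame, *position, *orientation)


def unpack_state(packet: bytes) -> Tuple[int, Vec3, Vec3]:
    """Inverse of pack_state."""
    values = struct.unpack(STATE_FORMAT, packet)
    return values[0], values[1:4], values[4:7]


def send_state(packet: bytes, address: Tuple[str, int]) -> None:
    """Send one datagram; UDP gives no delivery or ordering guarantee."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(packet, address)


packet = pack_state(7, (1.5, 0.25, -2.0), (90.0, 0.0, -45.0))
print(len(packet), unpack_state(packet))
```

A frame counter of this kind lets a receiver discard datagrams that arrive late or out of order, a common design choice when the newest state matters more than a complete history.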

System Software

Currently, we use Unity 3D to support the HIVE. Unity provides a basic framework for quickly constructing real-time 3D computer-simulated environments. Because it supports most standard 3D model and texture formats, visual content can be developed easily with third-party modeling programs.

HIVE researchers have implemented a HIVE application programming interface (API). Its primary purpose is to standardize how software interfaces with the HIVE's various input devices and to speed the implementation of research and other HIVE applications. This standardization also speeds the development of virtual worlds by providing specialized functions that support experimental work conducted in the HIVE.
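The HIVE API itself is not shown in this description. Purely to illustrate what standardizing access to input devices can look like, the sketch below defines a common interface that every device implements, so application code can poll all devices the same way. It is written in Python for brevity rather than in a Unity scripting language, and every class and method name is hypothetical.

```python
from abc import ABC, abstractmethod
from typing import Dict, Tuple

Reading = Tuple[float, ...]


class InputDevice(ABC):
    """Common interface: each device returns its latest reading as a tuple."""

    @abstractmethod
    def read(self) -> Reading:
        ...


class FixedReadingDevice(InputDevice):
    """Stand-in device that always reports the same reading (for illustration)."""

    def __init__(self, reading: Reading) -> None:
        self._reading = reading

    def read(self) -> Reading:
        return self._reading


class DeviceRegistry:
    """Lets application code poll every registered device in one call."""

    def __init__(self) -> None:
        self._devices: Dict[str, InputDevice] = {}

    def register(self, name: str, device: InputDevice) -> None:
        self._devices[name] = device

    def poll(self) -> Dict[str, Reading]:
        return {name: device.read() for name, device in self._devices.items()}


registry = DeviceRegistry()
registry.register("position", FixedReadingDevice((1.0, 2.0, 0.5)))
registry.register("orientation", FixedReadingDevice((90.0, 0.0, 0.0)))
print(registry.poll())
```

With an interface like this, adding a new tracker means writing one new `InputDevice` subclass while experiment code stays unchanged.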

Coming Soon

- XSens MOVEN inertial body tracking

- Multi-user support